Medical Care & Mental Health

The Big Picture: Health & Welfare

Health and medical care shape military service and wars. For centuries, battles have been won or lost based not just on how many succumb to attacks by declared enemies wielding weapons, but also on how many succumb to powerful pathogens and the lingering aftereffects of injuries. The threats encountered, and the services offered in the field, in hospitals near lines of battle, and on home fronts, can bolster or undercut military strength. Indeed, observers measure a war’s impacts, and whether militaries have succeeded or failed, in part by the numbers of service members injured and killed.

On a more granular level, health is personal, and military medical care constitutes a social interaction. The long-term physical and mental well-being of service members can be deeply affected by what they encounter during service. As part of a military hierarchy, soldiers have limited control over the conditions to which they are exposed and the care that they receive. Meanwhile, in a setting like the US Armed Forces, health professionals who serve must balance the goals of increasing the efficiency of the military and pursuing their own professional development against the moral obligation, commonly associated with the Hippocratic Oath, to “do no harm” to their patients.

World War II service members lived through an inflection point in the history of medicine and warfare. In all previous US wars, non-battle deaths—related to conditions like smallpox, typhoid, dysentery, yellow fever, tuberculosis, and influenza—outnumbered battle-related fatalities. During the Spanish-American War, more than 2,000 of the approximately 2,400 deaths were due to causes other than battle. During World War I, 53,000 died due to battle versus 63,000 who died due to other causes. World War II marked the first time the ratio was reversed. Of 16.1 million who served, 405,399 died—291,557 of them in battle, and 113,842 due to other causes.1

A variety of factors contributed to the shift. Crucially, during World War II, the government mobilized relatively expansive public, professional, and private resources to enhance health-related research and development, as well as services offered by the Army Surgeon General’s Office, which oversaw care for soldiers. Also, rather than creating mobilization and treatment plans from scratch, the military health apparatus built on knowledge and administrative infrastructure developed during and after prior conflicts.

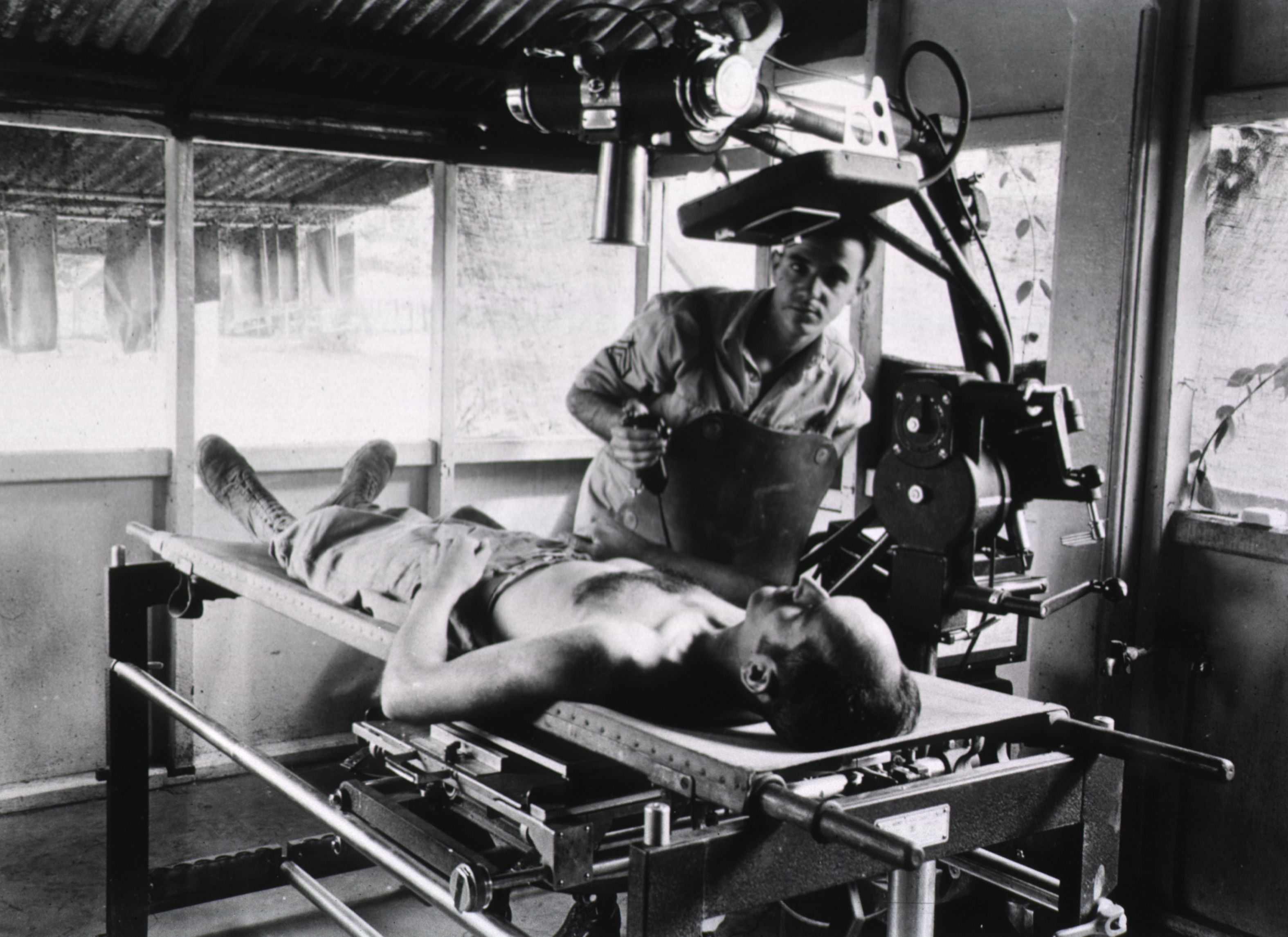

"X-ray procedure at the 1st Field Hospital, Milne Bay, New Guinea," 1943. Courtesy of NLM, Unique ID 101443190.

Here, I focus on malaria, venereal disease, and combat stress—three conditions of particular concern to army officials, and much discussed in The American Soldier in World War II’s collected surveys—to highlight key aspects of the relationship between health and military service. The army’s approach to these challenges demonstrates that war leads to opportunities for medical discovery and progress, but also brings about ethical challenges and tremendous human suffering. An examination of these illnesses shows, too, that medical services during wars are not static; militaries adapt their approaches, sometimes rendering conditions less dangerous over time. But even as advances are celebrated, ideas about health and the experience of medical care on the ground level are governed by military hierarchy and values, and the social norms and biases of larger society.

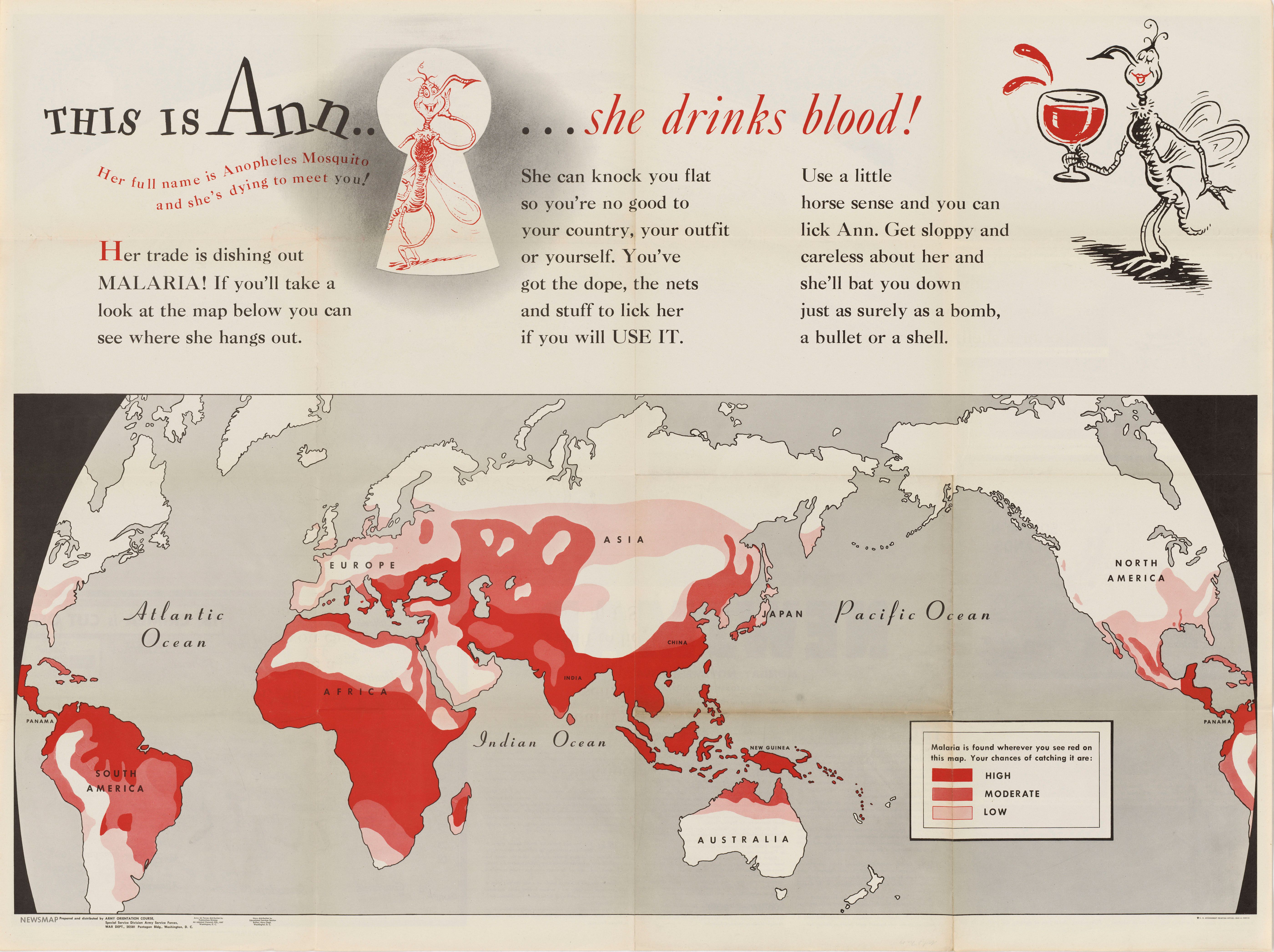

“This is Ann… she drinks blood!” Newsmap 2, no. 29 (8 Nov. 1943). Courtesy of NARA, 26-NM-2-29b, NAID 66395204.

Zooming In

Malaria

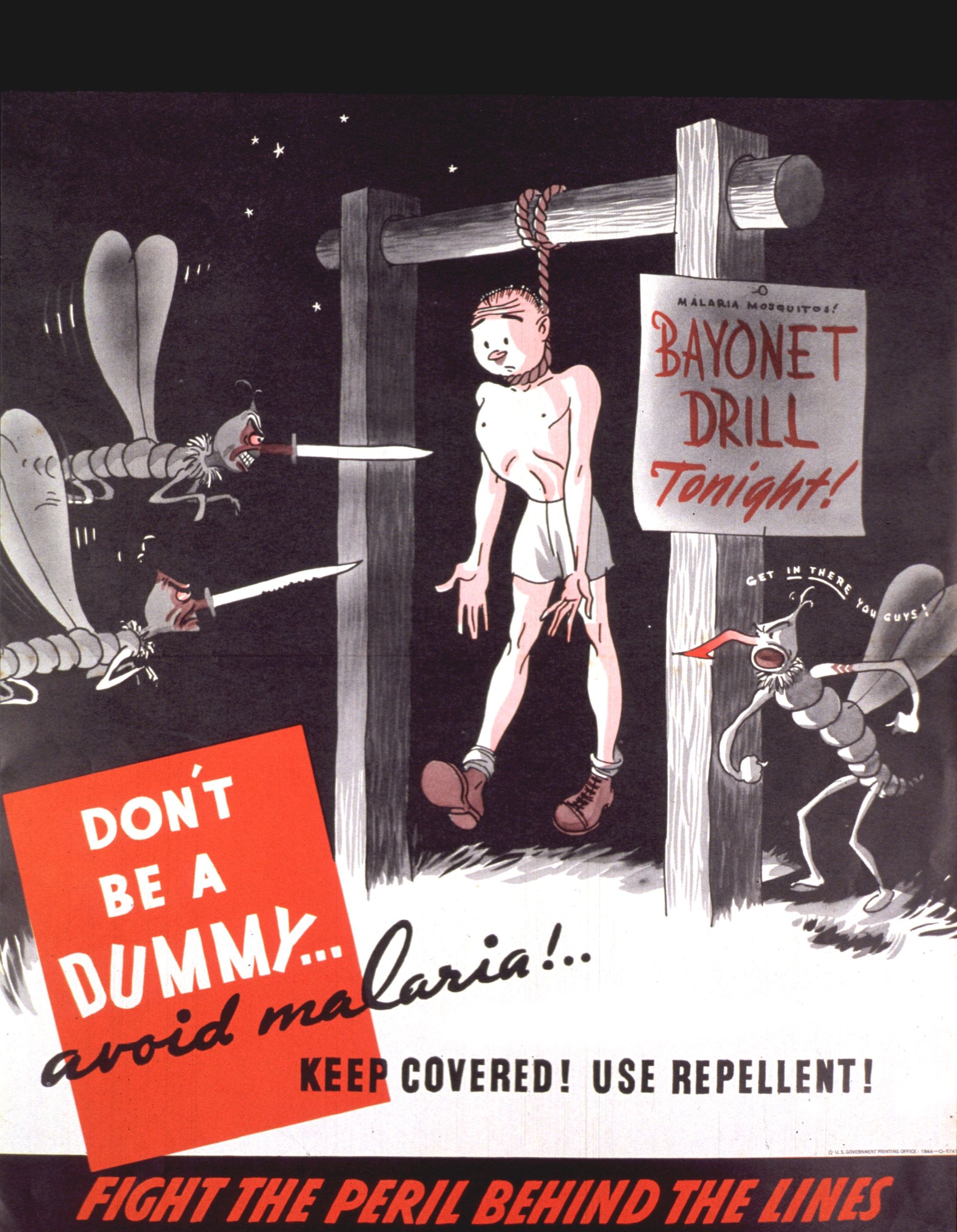

“Don't be a dummy—avoid malaria: keep covered, use repellent!” 1944. Courtesy of NLM, Unique ID 101454784.

Like so many aspects of army life, medical risks and treatments during World War II were contingent on the nature and location of one’s service. In the Southwest Pacific, where death rates due to disease were highest, soldiers faced scourges like malaria, as well as tsutsugamushi fever, poliomyelitis, and diseases of the digestive system. In the northern theater—Alaska, Canada, Greenland, Iceland—threats included cold injuries like frostbite and trench foot.2 Neuropsychiatric disorders and venereal disease were widespread, regardless of where one served, including among those in the United States.

Malaria had for generations posed a threat to wartime operations, not to mention the well-being of service members and civilians alike, and it was of central concern to military officials and medical personnel during World War II. Army doctor Paul F. Russell recalled after the war an earlier statement from General Douglas MacArthur, who had reported that he “was not at all worried about defeating the Japanese, but he was greatly concerned about the failure up to that time to defeat the Anopheles mosquito,” the vector for malaria. By war’s end, more than 490,000 soldiers had been diagnosed with malaria, equating to a loss of approximately nine million “man-days.”3

The story of the quest to quash this centuries-old ailment highlights how war-related medical research could propel ongoing efforts toward biomedical advances. When the war began, soldiers were mainly given quinine to protect against malaria, but medical professionals questioned its effectiveness, and soldiers complained of its detrimental side effects. By 1942, supplies of the drug had dried up after Japanese forces occupied Java, the island where most of the world’s cinchona trees, whose bark is the source of quinine, were cultivated. Driven both by the long-standing challenge of eradicating a powerful pathogenic foe and by the immediate needs of the standing army, experts in the public and private sectors took action. With US government funding, they cooperated to test and deploy a new drug therapy, chloroquine, and the chemical insecticide dichloro-diphenyl-trichloroethane (DDT). Each played an important role in combating not just malaria but other mosquito-borne diseases as well.4

The path to progress, however, was paved with suffering—and not just among those who languished in the field with tired legs and other disturbing symptoms of malaria. Incarcerated people, people institutionalized with mental illnesses, and conscientious objectors served as human subjects in the studies necessary to test and prove the efficacy of new drugs. They were just some of the vulnerable groups coerced to participate in health experiments in the name of the greater war effort. Meanwhile, men, women, and children who lived near front lines—not to mention military veterans who served in combat zones—suffered the long-term impacts of exposure to chemicals like DDT. Reducing the threat of malaria and other war-related conditions cost precious human resources.5

Venereal Disease

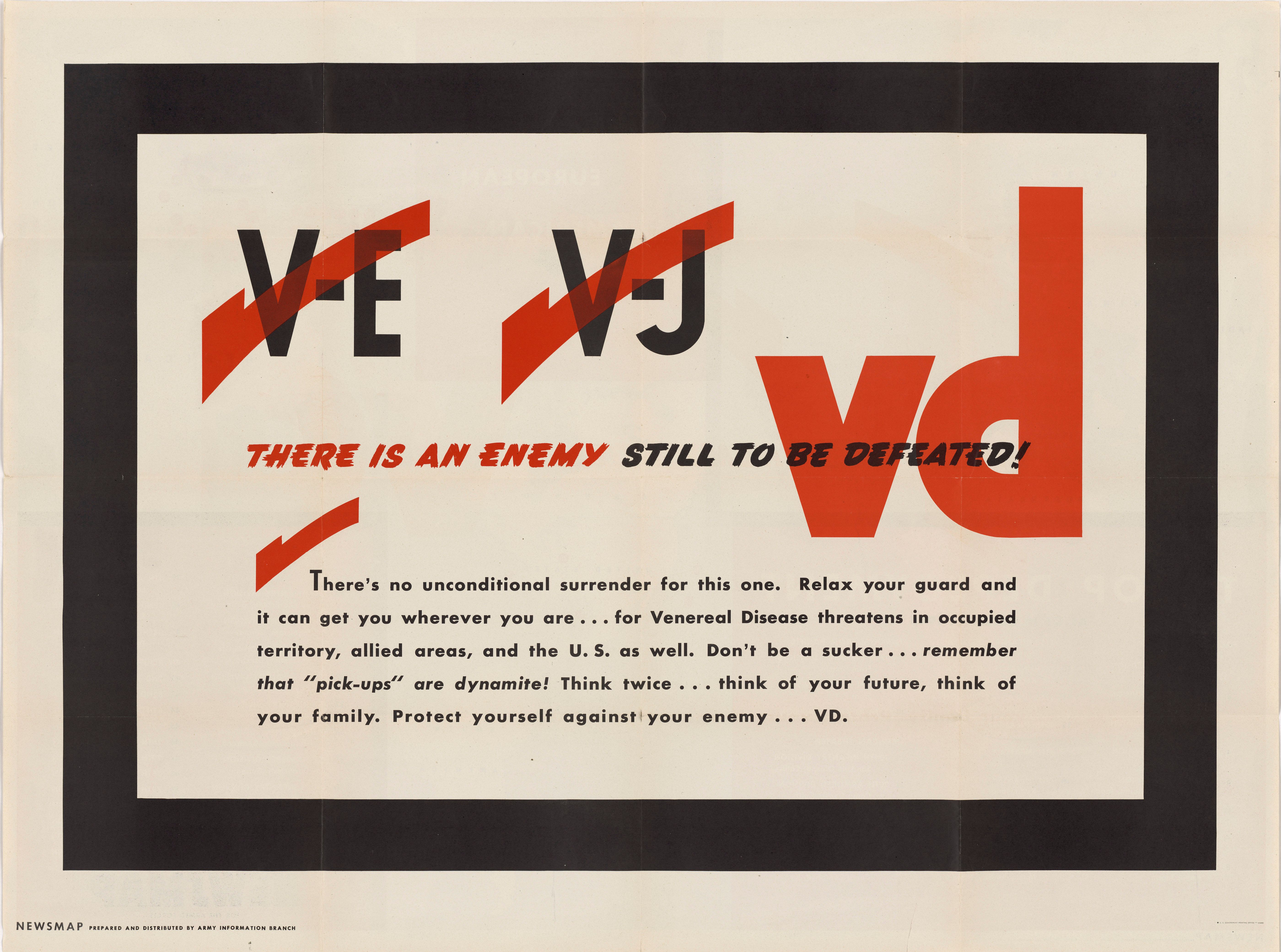

While malaria was a tremendous threat, it was not the most common infectious disease diagnosis among those admitted to hospitals. That distinction belonged to the venereal diseases (VD), such as syphilis and gonorrhea. VD accounted for 1.25 million hospital admissions between 1942 and 1945, equivalent to an annual rate of 49 admissions per 1,000 soldiers.6 Although pervasive, venereal disease, like malaria, generally became less dangerous over the course of the war. That was partly because army officials, informed by experiences during previous conflicts, increasingly rejected prudishness and approached VD transmission as a medical, rather than a moral, problem. By the final years of the war, they also had access to new antibiotics that were highly effective in treating sexually transmitted diseases.

Government-run efforts to control VD were wide-ranging and somewhat scattershot, focused both on the behaviors of service members and on those with whom they might have sexual encounters. The War Department issued a firm notice in December 1940 after hearing that medical officers were “examining inmates of houses of prostitution as a protective measure in safeguarding enlisted personnel against venereal disease.” Army Surgeon General James Magee declared: “It is recognized by those interested in public health that the attempted segregation and regulation of prostitution is of no public health value… unrelenting war must be waged on prostitution…” For its part, the US Congress passed the May Act in 1941, barring prostitution in the vicinity of military installations, though the law would be enforced only selectively. Meanwhile, the army gradually expanded its use of posters and pamphlets offering information about sexual hygiene, and distributed prophylactic kits to soldiers. In 1944, an act of Congress repealed a 1926 policy stipulating that soldiers who had VD could be disciplined and lose pay, which enabled more proactive management of the diseases. In some European zones, for example, the army worked with local authorities to establish contact tracing programs.7

In the case of VD, as with malaria, medical discovery offered an advantage to the Allies. Late in the war, a sweeping and unprecedented government-funded research program bore fruit, and penicillin became available en masse. This powerful antibiotic was effective against sexually transmitted diseases, among other ailments. Before penicillin was widely available, hospital admissions for VD remained high despite the army’s ground-level efforts at prevention and control. By 1945, patients were much better off than their counterparts just a few years earlier: treatment time for VD fell from multiple weeks to mere days. In the closing months of the war, the army was even offering penicillin to “selected civilian women” in France.8

“V-E. V-J. VD. There is an Enemy Still to be Defeated,” Newsmap 4, no. 36 (24 December 1945). Courtesy of NARA, 26-NM-4-36b, NAID 66395400.

Combat Exhaustion

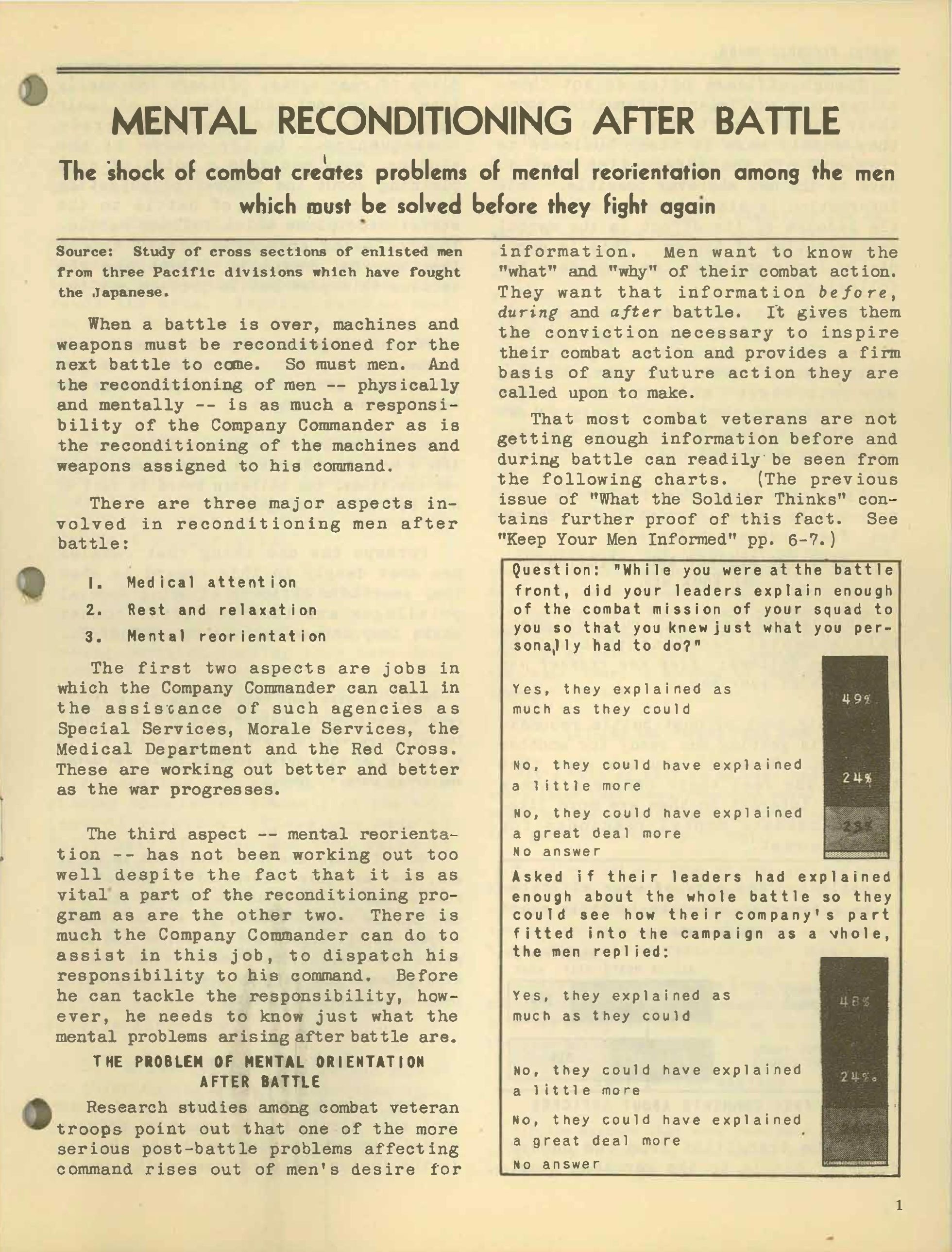

The army’s approach to mental health care during World War II, too, shows how treatment changed over time, and how wartime experiences could affect individual well-being and broader understandings of disease. Hoping to avoid the high rates of discharge for mental illness seen among World War I soldiers, the army initially relied on extensive medical screening intended to weed out men with a supposed predisposition to so-called breakdown. Between 1941 and 1944, more than 10 percent of examined men (roughly two million of 15 million) were excluded from service. Thirty-seven percent of those rejections were based on neuropsychiatric findings. Still, diagnoses of mental “disorders” within the military soared well beyond expectations. A total of one million soldiers were admitted for neuropsychiatric illness, approximately 6 percent of all wartime admissions. Within two years of American entry into the war, it was clear that so-called combat stress or “exhaustion” would pose a major threat to soldiers and the army they served, as it had in earlier wars. Experiences and realizations of the World War II period had important implications for the future of military medicine.9

Army officials began devoting more resources to neuropsychiatric treatment because they needed to increase return-to-duty rates, but the long-term effects of that care on individual service members were uncertain. In early 1943, military psychiatrists noted that men in the Tunisian campaign diagnosed as “psychiatric casualties” were generally lost to their units after being transferred to distant base hospitals. To increase retention, they instituted the principles of “forward psychiatry.” World War I-era armies had adopted these principles, but US planners had since largely disregarded them in preparing for World War II. The principles were to treat patients quickly, in close proximity to battle, and with the expectation that they would recover. In the spring of 1943, army psychiatrist Frederick Hanson reported that this approach had returned 70 percent of approximately 500 psychiatric battle casualties to duty. After that, it was gradually adopted in other theaters. Still, military psychiatrists acknowledged that the method was hardly a panacea. Systematic follow-up studies were lacking, but one contemporary account noted that many who underwent treatment were unable to return to combat, and that some who did “relapsed after the first shot was fired.”10

“Mental Reconditioning after Battle,” What the Soldier Thinks, no. 8 (August 1944).

Psychiatrists’ observations about World War II soldiers with so-called “combat exhaustion” had several major long-term implications. First, they validated the long-held idea that war could have devastating impacts on individuals’ mental health. Second, they offered insights about how small group cohesion, perhaps more than the military’s stated mission, could serve as a motivator to fight and act as a defense against the onset of mental ills. Third, they made it clear that, despite the early focus on screening for a “predisposition” to breakdown, the conditions of war could weaken even the “normal mind.” Their conclusions undergirded the army’s reliance on shorter tours of duty in future wars, since World War II soldiers’ years-long tours were believed to increase the risk of combat stress. Finally, 60 percent of psychiatric diagnoses during World War II occurred among soldiers stationed in the United States. Practitioners therefore concluded that mental breakdown could be prompted by low morale and distrust of the military and fellow personnel, not just by combat.11

Health & Welfare—And The American Soldier

The treatment of malaria, VD, and mental trauma reveals that, on the broadest level, wartime medical care and research can yield discoveries that benefit military operations and personnel, as well as civilians. But the American Soldier surveys show that perceptions of care could be shaped less by theoretical and research advances, or by access to specific drug therapies, than by daily lived experience. While the great majority of the hospital patients surveyed by Stouffer’s team reported being satisfied with health services and personnel, some voiced reservations (PS-IV and S-193). Black soldiers shared particular concerns: “We is not treated good at all,” one respondent wrote. “I would rather have a color doctor like we have at home.” Others were bothered by an allegedly detached medical culture. “The Army would be much better off if more inlisted [sic] men were officers rather than college ‘graduates’ who know nothing about Army life.” Some doctors failed to make rounds regularly, one service member said, “not realizing how important it is to the [patient] involved.” Health-related interactions took place within a system that reflected not just the promise of science and medicine, but also the hierarchy of the military and the complex dynamics and inequities of larger society. War, clearly, created diverse forms of medical devastation, as well as far-reaching medical triumphs.

Jessica L. Adler

Florida International University

Further Reading

Justin Barr and Scott H. Podolsky, “A National Medical Response to Crisis: The Legacy of World War II,” New England Journal of Medicine 383, no. 7 (2020): 613–15.

Roger Cooter, Mark Harrison, and Steve Sturdy, eds., Medicine and Modern Warfare (Rodopi, 1999).

Albert E. Cowdrey, Fighting for Life: American Medicine in World War II (The Free Press, 1994).

Mary C. Gillett, The Army Medical Department, 4 vols. (Center of Military History, 1981–2009). The volumes cover 1775–1818, 1818–1865, 1865–1917, and 1917–1941.

Bernard D. Rostker, Providing for the Casualties of War: The American Experience Through World War II (RAND Corporation, 2013).

Charissa J. Threat, Nursing Civil Rights: Gender and Race in the Army Nurse Corps (University of Illinois Press, 2015).

Bobby A. Wintermute, Public Health and the U.S. Military: A History of the Army Medical Department, 1818-1917 (Routledge, 2011).

Select Surveys & Publications

PS-IV: Attitudes toward Medical Care

S-74: Neurotic Screen Test (A Pretest)

S-77: Hospital Study

S-108: Neuropsychiatric Study

S-126: NP Study

S-146: VD and Malaria (Magazine Questions)

S-193: Hospital Survey

What the Soldier Thinks, no. 6 (May 1944)

What the Soldier Thinks, no. 8 (August 1944)

What the Soldier Thinks, no. 13 (April 1945)

Notes

1. Vincent J. Cirillo, “Two Faces of Death: Fatalities from Disease and Combat in America’s Principal Wars, 1775 to Present,” Perspectives in Biology and Medicine 51, no. 1 (2008): 121–33.

2. Frank A. Reister, Medical Statistics in World War II (Washington, D.C.: Office of the Surgeon General, Department of the Army, 1975), 31–46.

3. Paul F. Russell, “Introduction,” in Medical Department, United States Army in World War II, Preventive Medicine, vol. VI, Communicable Diseases: Malaria (Washington, D.C.: Office of the Surgeon General, Department of the Army, 1963); Cirillo, “Two Faces of Death.”

4. David Kinkela, DDT and the American Century: Global Health, Environmental Politics, and the Pesticide That Changed the World (Chapel Hill: University of North Carolina Press, 2013); Leo Slater, War and Disease: Biomedical Research on Malaria in the Twentieth Century (New Brunswick: Rutgers University Press, 2014); and Oliver R. McCoy, “Chapter 2: War Department Provisions for Malaria Control,” in Medical Department, United States Army in World War II, Preventive Medicine, vol. VI.

5. Karen M. Masterson, The Malaria Project: The U.S. Government’s Secret Mission to Find a Miracle Cure (New York: Penguin Random House, 2015). For more on World War II-related medical experimentation, see Susan L. Smith, Toxic Exposures: Mustard Gas and the Health Consequences of World War II in the United States (New Brunswick: Rutgers University Press, 2016).

6. Reister, Medical Statistics, 38.

7. Thomas H. Sternberg et al., “Chapter 10: Venereal Diseases,” in Medical Department, United States Army in World War II, Preventive Medicine, vol. V, Communicable Diseases: Transmitted Through Contact or By Unknown Means (Washington, D.C.: Office of the Surgeon General, Department of the Army, 1960).

8. Robert Bud, Penicillin: Triumph and Tragedy (Oxford: Oxford University Press, 2009); and Roswell Quinn, “Rethinking Antibiotic Research and Development: World War II and the Penicillin Collaborative,” American Journal of Public Health 103, no. 3 (2013): 426–34.

9. Hans Pols, “War and Military Mental Health,” American Journal of Public Health 97, no. 12 (2007): 2132–42.

10. Edgar Jones and Simon Wessely, “Forward Psychiatry in the Military: Its Origins and Effectiveness,” Journal of Traumatic Stress 16, no. 4 (2003): 411–19.

11. The “normal mind” from Pols, “War and Military Mental Health.” Also, see Eric T. Dean, Shook over Hell: Post-Traumatic Stress, Vietnam, and the Civil War (Cambridge, MA: Harvard University Press, 1999); Allan V. Horwitz, PTSD: A Short History (Baltimore: Johns Hopkins University Press, 2018); and Allan Young, The Harmony of Illusions: Inventing Post-Traumatic Stress Disorder (Princeton: Princeton University Press, 1997). See also Robert S. Anderson et al., Neuropsychiatry in World War II, vol. 1, and William S. Mullins et al., Neuropsychiatry in World War II, vol. 2 (Washington, D.C.: Office of the Surgeon General, Department of the Army, 1966–1973). Finally, see Leo H. Bartemeier et al., “Combat Exhaustion,” The Journal of Nervous and Mental Disease 104, no. 4 (1946): 358–89.

SUGGESTED CITATION: Adler, Jessica L. “Medical Care & Mental Health.” The American Soldier in World War II. Edited by Edward J.K. Gitre. Virginia Tech, 2021. https://americansoldierww2.org/topics/medical-care-and-mental-health. Accessed [insert date].

COVER IMAGE: “This is Ann… she drinks blood!” Newsmap 2, no. 29 (8 November 1943). Army Orientation Course, Special Service Division, Army Service Forces, War Department. Courtesy of NARA, 26-NM-2-29b, NAID 66395204.